Recently, I was looking into the internals of the Visual Studio debugger for the .NET Diagnostics Expert course. I was especially interested in how Docker debugging works. For those of you who haven’t tried it yet, here is a short description.

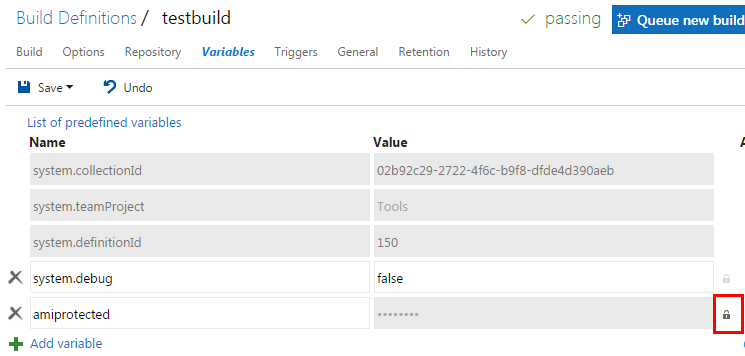

In Visual Studio 2019, when we work on the ASP.NET Core project, it is possible to create a launch profile that points to a Docker container, for example:

That’s fantastic, as we can launch the container directly from Visual Studio and, even better, debug it! To make this all work, Visual Studio requires a Dockerfile in the project’s root folder. The default Dockerfile (which the ASP.NET Core application wizard can create for you) looks as follows:

```dockerfile
FROM mcr.microsoft.com/dotnet/core/aspnet:3.1-buster-slim AS base
WORKDIR /app
EXPOSE 80
EXPOSE 443

FROM mcr.microsoft.com/dotnet/core/sdk:3.1-buster AS build
WORKDIR /src
COPY ["WebApplication1.csproj", ""]
RUN dotnet restore "./WebApplication1.csproj"
COPY . .
WORKDIR "/src/."
RUN dotnet build "WebApplication1.csproj" -c Release -o /app/build

FROM build AS publish
RUN dotnet publish "WebApplication1.csproj" -c Release -o /app/publish

FROM base AS final
WORKDIR /app
COPY --from=publish /app/publish .
ENTRYPOINT ["dotnet", "WebApplication1.dll"]
```

And that’s it. If we press F5, we land inside an application container, and we can step through our application’s code. It all looks like magic, but as usual, there are protocols and lines of code that run this machinery behind the magical facade. And in this post, we will take a sneak peek at them 😊.
